It’s Time to Regulate the Internet


Franklin Foer

It will be fantastically satisfying to see the boy genius flayed. All the politicians—ironically, in search of a viral moment—will lash Mark Zuckerberg from across the hearing room. They will corner Facebook’s founding bro, seeking to pin all manner of sin on him. This will make for scrumptious spectacle, but spectacle is a vacuous substitute for policy.

As Facebook’s scandals have unfolded, the backlash against Big Tech has accelerated at a dizzying pace. Anger, however, has outpaced thinking. The most fully drawn and enthusiastically backed proposal now circulating through Congress would regulate political ads that can appear on the platform, a law that hardly curbs the company’s power or profits. And, it should be said, a law that does nothing to attack the core of the problem: the absence of governmental protections for personal data.

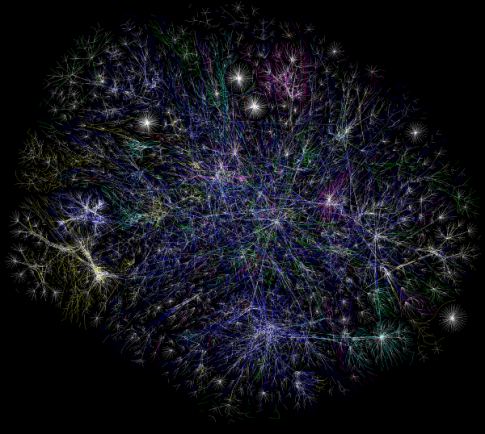

The defining fact of digital life is that the web was created in the libertarian frenzy of the 1990s. As we privatized the net, releasing it from the hands of the government agencies that cultivated it, we suspended our inherited civic instincts. Instead of treating the web like the financial system or aviation or agriculture, we refrained from creating the robust rules that would ensure safety and enforce our constitutional values.

This weakness has long been apparent to activists toiling on the fringes of debate—and the dangers might even have been apparent to most users of Facebook. But it’s one thing to abstractly understand the rampant exploitation of data; it’s another to graphically see how our data can be weaponized against us. And that’s the awakening occasioned by the rolling revelation of Facebook’s complicity in the debacle of the last presidential campaign. The fact that Facebook seems unwilling to fully own up to its role casts further suspicion on its motives and methods. And in the course of watching the horrific reports, the public may soon arrive at the realization that it is the weakness of our laws that has provided the basis for Facebook’s tremendous success.

If we step back, we can see it clearly: Facebook’s business model is the evisceration of privacy. That is, it aims to induce its users to share personal information (what the company has called “radical transparency”) and then to surveil them, generating the insights that keep them “engaged” on its site and let the company target them precisely with ads. Although Mark Zuckerberg will nod in the direction of privacy, he has been candid about his true feelings. In 2010, for instance, he said that privacy is no longer a “social norm.” (Once upon a time, in a fit of juvenile triumphalism, he even called people “dumb fucks” for trusting him with their data.) And executives in the company seem to understand the consequences of their apparatus. When I recently sat on a panel with a representative of Facebook, he admitted that he hadn’t used the site for years because he was concerned with protecting himself against invasive forces.

We need to constantly recall this ideological indifference to privacy, because there should be nothing shocking about the carelessness revealed in the Cambridge Analytica episode. Facebook apparently had no qualms about handing over access to your data to the charlatans working on behalf of Cambridge Analytica, expending nary a moment’s time vetting them or worrying about whatever ulterior motives they might have had for collecting so much sensitive information. This wasn’t an isolated incident. Facebook gave data harvesters access as part of a devil’s bargain with third-party app developers. The company needed relationships with these developers, because their applications lured users to spend ever more time on Facebook. As my colleague Alexis Madrigal has written, Facebook maintained lax standards for the harvest of data, even in the face of critics who stridently voiced concerns.

Mark Zuckerberg might believe that the world is better without privacy. But we can finally see the costs of his vision. Our intimate information was widely available to malicious individuals hoping to manipulate our political opinions, our intellectual habits, and our patterns of consumption; it was easily available to the proprietors of Cambridge Analytica. Facebook turned data, which amounts to an X-ray of the inner self, into a commodity traded without our knowledge.

In the face of such exploitative forces, Americans have historically asked government to shield them. The law protects us from banks that would abuse our ignorance and human weaknesses, and it restricts the commodification of our financial data. When manufacturers of processed food have stuffed their products with terrible ingredients, the government has forced them to disclose the full list of what goes into them. After we created transportation systems, the government insisted on speed limits and seat belts. There are loopholes in all of this, but there’s an unassailable consensus that these rules are far better than the alternative. We need to extend our historic model to our new world.

Fortunately, we don’t need to legislate in the dark. In May, the European Union will begin to enforce a sweeping set of rules called the General Data Protection Regulation. Over time, European nations had created their own agencies and limits on the excesses of the tech companies. But this forthcoming regime creates a single standard for the entirety of the EU: a massive and largely laudable effort to force the tech companies to clearly explain how they intend to use the personal information that they collect. It gives citizens greater power to restrict the exploitation of their data, including the right to have it erased.

The time has arrived for the United States to create its own regulatory infrastructure, designed to accord with our own values and traditions: a Data Protection Authority. That moniker, as I wrote in my book World Without Mind, contains an intentional echo of the Environmental Protection Agency, the governmental bureau that enforces environmental safeguards. There’s a parallel between the environment and privacy: both are goods that the unimpeded market would ruin. To be sure, we let business degrade the air, water, and forests. Yet we also impose crucial constraints on environmental exploitation for commercial gain, and we need the same for privacy. As in Europe, citizens should have the right to purge data that sits on pack-rat servers. Companies should be required to set default options so that citizens have to affirmatively opt in to surveillance rather than passively accept the loss of privacy.

Of course, creating a Data Protection Authority would raise a dense thicket of questions. And there’s a very reasonable fear that the rich and powerful will attempt to use these rules to squash journalism that they dislike. Thankfully, we have a more press-friendly body of jurisprudence than the Europeans do, and we can write our law to robustly protect reportage. Besides, the status quo poses far graver risks to democratic values. Once privacy disappears, it can never be restored. And as demagogues exploit the weaknesses in our system, our political norms might not recover.

When Cambridge Analytica deployed an app to bamboozle Facebook users into surrendering data, it cracked a fiendish joke. It gave its stalking-horse creation a name that seemed to acknowledge a vast and terrible exploitation: thisisyourdigitallife. If we can move beyond rhetorical venting, it needn’t be.

The Atlantic
