Online conspiracy groups are a lot like cults


Renee DiResta

IN RECENT MONTHS there’s been an increase in stories in which a follower of radical conspiracies shifts their actions from the web and into the world.

In June, a QAnon conspiracy follower kicked off a one-man standoff at the Hoover Dam in Nevada. Another QAnon supporter was arrested the next month occupying a Cemex cement factory. He claimed to know that Cemex was secretly assisting in child trafficking, a theory discussed in Facebook groups whose members had tried to push it into Twitter's trending topics.

In India, conspiracy theories about pedophiles spread over WhatsApp led to murders. Investigations of Cesar Sayoc, dubbed the “Magabomber,” turned up his participation in conspiratorial Facebook groups. There’s the Tree of Life synagogue shooter. The Comet Ping Pong “Pizzagate” shooter. There are, unfortunately, plenty of examples.

Of course, it’s expected that people who commit acts of aggression will also have social media profiles. And most people who believe in a conspiracy theory are not prone to violence. But these social media profiles share another common thread: evidence that the perpetrators’ radicalization happened within an online conspiracy group.

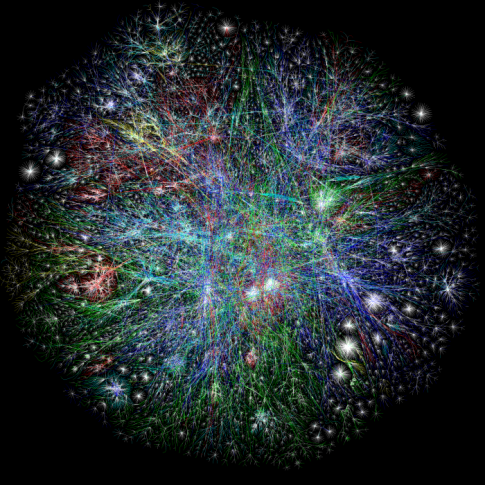

We used to worry about filter bubbles, which accidentally trap users in a certain sphere of information. In the era of social networks, the bubble has expanded: People can easily become enmeshed in online communities that operate with their own media, facts, and norms, in which outside voices are actively discredited. Professor C. Thi Nguyen of Utah Valley University refers to these places as echo chambers. “An epistemic bubble is when you don’t hear people from the other side,” he writes. “An echo chamber is what happens when you don’t trust people from the other side.”

There are some common pathways reported by people who fall into, and then leave, these communities. They usually report that their initial exposure started with a question, and that a search engine took them to content they found compelling. They engaged with the content and then found more. They joined a few groups, and soon a recommendation engine sent them to others. They alienated old friends but made new ones in the groups, chatted regularly about their research, built communities, and eventually recruited other people.

“When you met an ignorant nonbeliever, you sent them YouTube videos of excessively protracted contrails and told them things like: ‘Look at the sky! It’s obvious!’” Stephanie Wittis, a self-described former chemtrails and Illuminati conspiracy believer, told Vice. “You don’t even go into detail about the matter or the technical inconsistencies, you just give them any explanation that sounds reasonable, cohesive, and informed: in a word, scientific. And then you give them the time to think about it.”

This behavior resembles another, older phenomenon: It’s strikingly similar to cult recruitment tactics of the pre-internet era, in which recruits are targeted and then increasingly isolated from the noncult world. “The easiest way to radicalize someone is to permanently warp their view of reality,” says Mike Caulfield, head of the American Association of State Colleges and Universities’ Digital Polarization Initiative. “It’s not just confirmation bias ... we see people moving step by step into alternate realities. They start off questioning and then they’re led down the path.”

The path takes them into closed online communities, where members are unlikely to have real-world connections but are bound by shared beliefs. Some of these groups, such as the QAnon communities, number in the tens of thousands. “What a movement such as QAnon has going for it, and why it will catch on like wildfire, is that it makes people feel connected to something important that other people don’t yet know about,” says cult expert Rachel Bernstein, who specializes in recovery therapy. “All cults will provide this feeling of being special.”

The idea that “more speech” will counter these beliefs fundamentally misunderstands the dynamics of these online spaces: Everyone else in the group is also part of the true-believer community. Information does not need to travel very far to reach every member of the group. What’s shared conforms to the views all the members hold, which reinforces the group’s worldview. Inside Cult 2.0, dissent is likely to be met with hostility, doxing, and harassment. There is no counterspeech. There is no one in there who’s going to report radicalization to the Trust and Safety mods.

Online radicalization is now a factor in many destructive and egregious crimes, and the need to understand it is growing more urgent. Digital researchers and product designers have a lot to learn from the deprogramming and counterradicalization work done by psychologists. “When people get involved in a movement, collectively, what they’re saying is they want to be connected to each other,” Bernstein adds. “They want to have exclusive access to secret information other people don’t have, information they believe the powers that be are keeping from the masses, because it makes them feel protected and empowered. They’re a step ahead of those in society who remain willfully blind. This creates a feeling similar to a drug; it’s its own high.”

This conviction largely inures members to correction, which is a problem for the fact-checking initiatives that platforms are focused on. When Facebook tried adding fact-checking to misinformation, researchers found, counterintuitively, that people doubled down and shared an article more when it was disputed. “They don’t want you to know,” readers claimed, alleging that Facebook was trying to censor controversial knowledge.

YouTube is still trying; it recently began adding links to Wikipedia entries that debunk videos pushing popular conspiracy theories. In March, YouTube CEO Susan Wojcicki told WIRED that the site was adding these entries for the internet conspiracy theories with the most active discussion. (Perhaps she’s operating under the premise that believers in a vast globalist conspiracy just haven’t read the Wikipedia article about the Holocaust.) The social platforms are still behaving as if they don’t understand the dynamics at play, even though researchers have been explaining them for years.

So what does work? One-on-one interventions and messages from people within these trusted networks. These consistently have the greatest impact on deradicalization. Since that’s very difficult to scale online, experts look to prevent people from becoming radicalized, either by inoculating communities or by attempting to intercede as early as possible in the radicalization process. But this requires companies to fundamentally shift their recommendation processes and redirect certain users away from what they wish to see. It requires the platforms to challenge their own search engines, and perhaps to make judgment calls about the potential harm that comes from certain types of content.

Until now, the platforms have been willing to undertake this thorny evaluation only after extensive public pressure and government pleas, and only in cases of explicit terrorist radicalization. To counter violent terrorist threats like ISIS, YouTube ran a program called Project Redirect, which used ad placement to suggest counter-channels to those searching for ISIS propaganda.

But companies are reluctant to apply these strategic nudges when the radicalization process is just a touch grayer. We’re increasingly aware of how social media pushes users into echo chambers online. We’re seeing more and more researchers and ex-conspiracists alike describe these dynamics using terms like “cult.” We’re seeing more violent acts traced back to online radicalization. When features designed to bring us together instead undermine society and drive us apart, it is past time for change.

/Wired
